LAMP

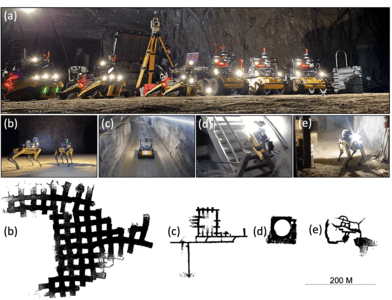

LAMP (Large-Scale Autonomous Mapping and Positioning for Exploration of Perceptually-Degraded Subterranean Environments) is the multi-robot LiDAR SLAM solution we deployed during the DARPA Subterranean Challenge. It is a computationally efficient, outlier-resilient, centralized multi-robot SLAM system that can adapt to different input odometry sources and operate in large-scale environments.

We have open-sourced the code, along with raw and processed multi-robot datasets from the scenarios we tested while preparing for the challenge.

Please check out our repo.

The initial version of the system is described in (Ebadi et al., 2020) and the final system is detailed in (Chang et al., 2022).

- Chang, Y., Ebadi, K., Denniston, C., Ginting, M. F., Rosinol, A., Reinke, A., Palieri, M., Shi, J., A, C., Morrell, B., Agha-mohammadi, A., & Carlone, L. (2022). LAMP 2.0: A Robust Multi-Robot SLAM System for Operation in Challenging Large-Scale Underground Environments. IEEE Robotics and Automation Letters (RA-L), 7(4).

- Ebadi, K., Chang, Y., Palieri, M., Stephens, A., Hatteland, A., Heiden, E., Thakur, A., Morrell, B., Carlone, L., & Agha-mohammadi, A. (2020). LAMP: Large-Scale Autonomous Mapping and Positioning for Exploration of Perceptually-Degraded Subterranean Environments. IEEE Intl. Conf. on Robotics and Automation (ICRA).